How to plan and conduct user interviews

⭐️ Key takeaways

- A user interview is a 1-on-1 conversation between a UX researcher (aka you in this case) and a participant.

- To conduct a user interview, you write a script with your research questions and then use that script to talk to participants. It’s one of the cheapest, simplest, and most common methods employed in user research.

- User interviews help you gain an understanding of your users: who they are, their goals, what they like about the product, what they want from your product, their pain points with the product, and many other things. User interviews are important because they give you insights into the needs and deep motivators of your users.

- User interviews can range from 15 minutes to an hour and a half. Most interviews we conduct are around 15-45 minutes.

- One of the best parts of user interviews is that you don’t need to conduct them with dozens and dozens of participants. Interviews are qualitative, so you’re looking for patterns rather than statistical significance. Usually, you only need to talk to 4-6 participants who represent your audience to see trends and gain insights.

- The structure of a user interview has three sections: the introduction, the interview questions, and the wrap-up.

- ~The introduction is a pre-written script that you read to each participant to frame the purpose of the interview and set the expectations for the session.

- ~Then come the questions, where you ask participants everything you need to know. This is the meat of the user interview.

- ~And the wrap-up is the script where you read closing remarks to the participants and ask if they have any questions for you.

- User interviews can be conducted during any stage of the UX process. That said, they’re most effective in the beginning stages when you’re still defining the problem and figuring out the general design direction.

- Before conducting user interviews, you need to do 6 things:

- ~Define your interview goals

- ~Create your interview questions

- ~~Make sure to avoid closed questions and leading questions

- ~~Instead, ask open, non-leading questions

- ~Build your script and discussion guide

- ~Test your equipment

- ~Recruit participants

- ~And conduct a dry run

- Dos and don'ts of user interviews:

- ~Do ask to record the session and let them know how you’re going to use this information. Don’t assume that it’s okay just to record someone. That can become a serious legal problem.

- ~Do focus on the participants. Don’t focus on note-taking. You’ll always have the recording to go back to. Like we mentioned previously, you can also use a transcription service such as Otter.ai to automatically transcribe the recording for you.

- ~Do ask seemingly simple questions. Don’t make assumptions.

- ~Do make your participant feel comfortable.

- ~Be okay with silence.

- ~Do keep an eye on the clock to make sure you don’t run over the session time. Don’t continue over time without getting their permission first.

- ~Be neutral. Don’t provide expressions or mannerisms that the participant can react to.

- ~Be open. Be curious. Show that you’re genuinely interested in what they are saying. Don’t act like their responses aren’t interesting. If participants feel like you’re bored with their answers, they’ll stop talking.

- 4 drawbacks of user interviews:

- ~People tend to say they’ll do one thing when they’ll actually do another.

- ~Your interview will only be as good as your participants’ memory.

- ~You might hear a bunch of noise. There’s a good chance your users will ask for random product features. Many times that’s just noise.

- ~Interviews aren’t the best platform for asking users if they want a specific piece of functionality. Most users will tell you “yes, I want that shiny new thing”, even if they won’t use it or if it’s not that helpful.

📗 Assignment

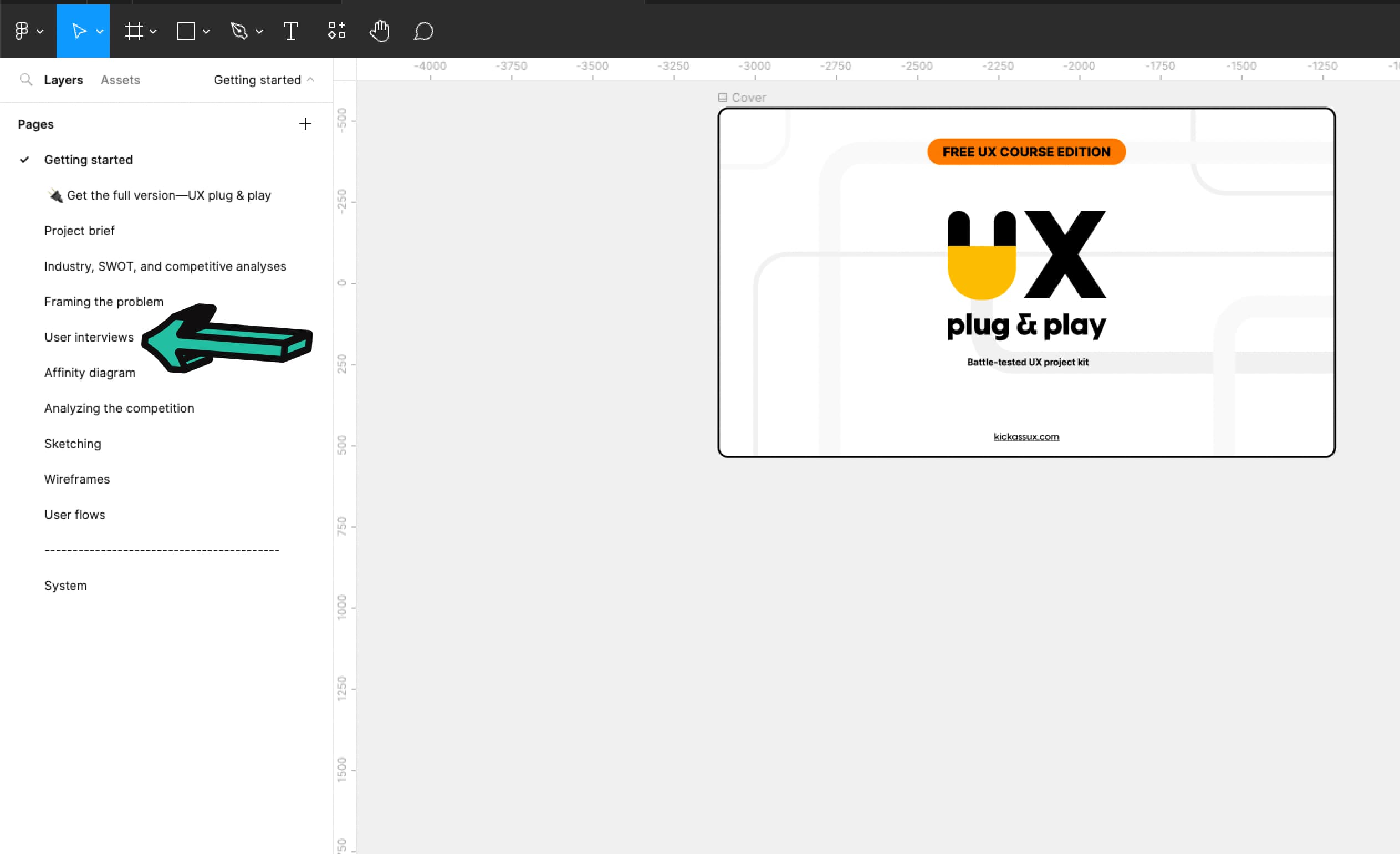

Go to the User interviews tab in the Figma workbook.

Then, analyze the interviews and write your top takeaways for REI (from the project brief).

💬 Transcript

Conducting user interviews is an essential part of the UX process.

But it's one of the trickiest methods to master.

In this video, we're going to talk about how to successfully conduct user interviews.

So buckle up, because it's going to be an intense, in-depth ride.

Let's go.

This is really going to be a beefy lesson. We'll cover what user interviews are and why they're important, when to conduct them, how to prepare for them, how to conduct them, the do's and don'ts of user interviews, their drawbacks, and what to do after you've finished conducting them.

By the end of this lesson, you'll have everything you need to plan and run user interviews.

As always, before we begin, and so you can follow along, download our course materials from the free UX course page at kickassux.com.

Now that that's out of the way, let's get after it.

There have been times in our careers when we were absolutely certain that users of our product had specific beliefs and behaviors.

It was only after we interviewed them that we realized we had it wrong and that they believed something else entirely.

Had we continued blindly with our assumptions, we would have designed the wrong thing.

Instead, we learned what we needed to design by talking directly to users.

So with that, let's talk about what user interviews are and why they're important.

A user interview is a one-on-one conversation between a UX researcher, AKA you in this case, and a participant.

To conduct a user interview, you write a script with your research questions, and then you use that script to talk to participants. That's it.

It's one of the cheapest, simplest, and most common methods employed in user research.

User interviews help you gain an understanding of your users: who they are, their goals, what they like about the product, what they want from your product, their pain points with the product, and many, many other things.

Basically, users will tell you their opinions and perceptions of an experience.

User interviews can range from 15 minutes to an hour and a half.

Most interviews we conduct, though, run around 15 to 45 minutes.

One of the best parts of user interviews is that you don't need to conduct them with dozens and dozens of participants, because you're looking for patterns rather than statistically significant numbers.

Usually you only need to talk to four to six participants who represent your audience to see trends and gain insights.

The structure of a user interview has three sections.

The introduction, the interview questions, and the wrap-up.

The introduction is a pre-written script that you read to each participant to frame the purpose of the interview and set expectations for the session.

Then come the questions, where you ask the participant everything you need to know.

This is the meat of the interview.

And the wrap-up is the script where you read closing remarks to the participant and ask if they have any questions for you.

User interviews are important because they give you insights into the needs and deep motivators of your users.

They help you better understand people's behaviors and their choices.

They also help validate assumptions, answer research questions, and give you empathy for the user.

Conducting user interviews directly helps you figure out the design direction.

It's fair to say that without them, creating user-centered designs is like shooting in the dark.

We've covered what user interviews are. Let's now talk about when to conduct them.

User interviews can be conducted during any stage of the UX process.

That said, they're usually most effective in the beginning stages when you're still defining the problem and figuring out the general design direction.

This way, it's still cheap and easy to change your approach based on user feedback.

User interviews are also used during the middle stages of the process or after usability testing to gather users' opinions about an existing product or design.

It's best to conduct user interviews in the beginning of your process, if possible.

This way you can uncover and validate your assumptions upfront before you sink too much time into going in the wrong direction.

Now let's cover how to prepare for user interviews.

There are five primary things to do.

Define your interview goals, create your interview questions and work them into the script/discussion guide, test your equipment, recruit participants, and conduct a dry run.

First, before diving into interviews, you need a solid reason for doing them.

You shouldn't be doing them just because you think you ought to.

That's a waste of time and shows that you don't know how to critically approach the problem.

Truly think about why you're going to conduct user interviews.

What are you hoping to learn? These are your interview goals.

Normally, you'd meet with stakeholders to identify what you need to learn together.

Many times, the reason you decide to conduct user interviews is to validate the problem you identified while framing the problem.

You also conduct user interviews to answer any questions that came up while framing the problem.

That said, there could be many other reasons for running user interviews.

So make sure to fully define your interview goals before starting.

Next, create your interview questions and add them to a script.

We added a sample user interview script that you can download directly from our Figma workbook.

So if you haven't already, make sure to download it from the free UX course page at kickassux.com.

Coming back around: if you've already framed the problem, you have all your research questions in one place.

So now you just need to format them to fit your interviews.

With that in mind, what's the first rule of user interviews?

Don't ask closed questions.

What's the second rule of user interviews?

Don't ask closed questions.

A closed question is a question where the only answers are "yes" or "no." The participant has no room to elaborate or tell you how they really feel.

For example, let's say you worked for Alaska Airlines and wanted to understand if people felt comfortable purchasing flights on its mobile app.

A closed question would be something like, "Would you purchase an airline ticket on our mobile app?"

The only answers are yes or no.

Don't do this.

Instead, ask open questions.

Open questions invite a greater response than just a yes or no answer.

They allow the user to fully explain their thoughts and opinions.

They also help you get to the reason behind why someone does something.

With that in mind, a better way to ask the question would be something like, "Can you tell me your thoughts about using your mobile phone to order airline tickets?"

Also, make sure to avoid leading questions.

Leading questions bias the participant towards a certain answer, which will skew the results of your interviews.

Using the same Alaska Airlines example, a leading question could be, "Which apps do you use to purchase airline tickets?"

This question assumes that they use mobile apps to purchase airline tickets and directs their focus only towards the mobile space rather than more broadly.

Again, don't do this.

Instead, ask non-leading questions.

These don't push the participant towards a specific response.

A better way to ask that question would be, "How do you currently purchase airline travel?"

In general, ask questions that are open and non-leading, meaning they invite a greater response than just a yes or no and don't bias their answer.

Before moving on, here are a few last tips for creating your interview questions.

Tip number one. If possible, create more questions than you'll have time for.

This ensures that you'll have something to talk about if the interview goes quickly.

Tip number two. With that previous tip in mind, ask the most important questions first just in case you run out of time.

And the final tip. Prepare follow-up questions or phrases to use after your main questions.

Moving on.

The third thing to do is test your equipment.

This can't be stressed enough.

It's super frustrating to show up to a user interview only to find out that you're having technical difficulties.

Not only does it make your job harder and waste valuable time, it also doesn't make you look very professional to the participant.

And they're less likely to provide insightful responses.

You don't need fancy microphones, cameras, or screen recorders to get the job done.

What you already have on your computer and phone should be enough.

Just be prepared for the environment you're recording in.

After testing your equipment, it'll be time to start recruiting.

In an ideal world, you'd already have started recruiting before any of this because recruiting takes time.

But when you're first starting out, being confident in your process and what you've created is really important.

That's why we waited to talk about recruiting until now.

Finding the right participants is critical for gaining valuable insights and results from research.

Unfortunately, it can be challenging to recruit the right people to participate in your research.

There are a few reasons for that.

First, many people just don't have the time.

Second, people you recruit need to represent the users of your product.

If they don't represent your users, your results won't give you the actionable insights you need to truly address your users' needs.

With that in mind, it's important to build criteria for who to recruit for your research.

The attributes of the people you're recruiting can be broad or highly specific, depending on what you're looking to learn.

Here are a few general rules to keep in mind for your criteria.

First, don't recruit people you know personally. Their responses can be biased just because they know you.

But in a pinch, they can work.

If you know someone who uses your product and you can't find anyone else, you can bend that rule.

Second, as much as possible, recruit people who represent your users or potential users.

List the attributes of the people you want to get feedback from.

Are they current users?

Are they new users?

Have they ever used your product?

Do they use a product like yours?

How tech savvy are they?

Et cetera.

Building successful recruiting criteria can be tricky.

If you define the criteria too broadly, you might end up with people who don't really represent your users.

If you're too specific, you might accidentally weed out good candidates and not be able to find people to participate.

Try building criteria that strike the right balance between broad and specific.

Research criteria fall into two categories: mandatory and optional.

Mandatory criteria are non-negotiable: a participant has to fit every single item listed.

With that in mind, list everything you know that needs to be true about the people you're going to interview. You don't want anyone who doesn't fit all the mandatory criteria.

For example, imagine you were working on a discount app for supermarkets.

Here are a few things that could be part of the mandatory criteria. These participants must go to the supermarket at least once a month.

And they must shop at general supermarkets like Safeway, Fred Meyer, Kroger, Trader Joe's, HEB, Albertsons, Walmart, Target, et cetera.

Optional criteria include other attributes that might be true of your target audience.

These are assumptions and might not matter to people who use the discount supermarket app.

It's good to include some of the questions from the optional criteria at the beginning of an interview so you can learn more about the target audience, what they prefer, and what their actions look like.

Back to the supermarket discount app, as an example.

Here are a few things that could be part of the optional criteria.

These participants don't shop at Whole Foods. Those who shop at Whole Foods are typically willing to pay full price (and then some).

That means they're probably less likely to use a discount shopping app.

These people also currently use coupons when they go shopping.

And they use apps like Groupon or LivingSocial.

Next, create your screener by turning your criteria into questions.

A screener is a list of questions that help you identify your target audience and weed out those who don't fit your criteria.

Getting back to the supermarket discount app, as an example, here's what our screener could look like.

Do you shop at a supermarket? If no, end the interview.

How often do you shop at the supermarket each month? If zero, end the interview. That's it.

These two questions came from the mandatory criteria and will eliminate people who do not fit in the target audience.

In this same example, it's also good to include some of the optional criteria as well just to learn more about the audience.

This might look like, "Which supermarkets do you shop at most often?

Do you use coupons when you shop? If yes, how often?

Do you use apps like Groupon or LivingSocial?"
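If you collect screener answers with a form or spreadsheet, the mandatory-versus-optional logic above can be sketched in a few lines of Python. This is a minimal sketch, not a feature of any particular survey or recruiting tool. The `ScreenerResponse` fields and the `passes_screener` function are hypothetical names chosen for illustration.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class ScreenerResponse:
    """One respondent's screener answers (hypothetical fields for the supermarket app example)."""
    shops_at_supermarket: bool
    trips_per_month: int
    # Optional-criteria answers: recorded to learn about the audience, never used to disqualify.
    favorite_stores: List[str] = field(default_factory=list)
    uses_coupons: Optional[bool] = None


def passes_screener(response: ScreenerResponse) -> bool:
    """Check the mandatory criteria in order; failing any one ends the session."""
    if not response.shops_at_supermarket:
        return False  # "Do you shop at a supermarket?" If no, end the interview.
    if response.trips_per_month < 1:
        return False  # "How often do you shop ... each month?" If zero, end the interview.
    return True


respondents = [
    ScreenerResponse(True, 4, ["Safeway", "Trader Joe's"], uses_coupons=True),
    ScreenerResponse(True, 0),
    ScreenerResponse(False, 0),
]
qualified = [r for r in respondents if passes_screener(r)]
print(len(qualified))  # 1
```

Only the first respondent qualifies. The optional answers (`favorite_stores`, `uses_coupons`) are stored but never checked. Optional criteria help you learn about your audience, and only the mandatory criteria screen people out.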

There are a few ways you can actively use your screener to weed people out of your research.

First, if you're reaching out to potential participants through email, social media, or your own network, you can build your screener into your message.

With the supermarket app example, you might post something on your social media accounts like, "I'm conducting research about a new supermarket app that helps you find the cheapest prices on products.

If you shop at supermarkets like Safeway, Kroger, Fred Meyer, Walmart, or Target at least once a month, I would love to talk with you."

Second, you can use a screener in real time when you talk directly to someone during an interview or test.

You can do this at the beginning of your session.

If they don't pass your screener, you politely let them know that they don't fit the parameters of your test, thank them for their time, and send them on their way.

The last way to use your screener is during a remote survey or usability test.

The screener would be at the beginning and, depending on the responses, the software would then either let them proceed or it would send them to an exit page where it tells them they didn't fit the criteria.

As a side note, to do this, your survey or usability testing tool would need a screening feature or conditional logic built into its functionality.

Now let's talk about the different ways you can find your participants.

We'll break these down into two categories.

First, we'll cover the free or inexpensive methods.

Second, we'll show you other ways to recruit participants when you're employed as a UX designer and have a bigger budget.

Let's start with the first category: free or inexpensive methods.

First is your own network. Think about your friends, family, Twitter, Facebook, LinkedIn, et cetera.

Use whatever works to find people who fit your criteria.

Second would be using services such as Amazon Mechanical Turk.

The advantage is that you'll interview people you don't know, which means you're less likely to bias participants.

While you do have to pay for Amazon Mechanical Turk, it's a fast and cheap way to get great results.

For example, you can easily get 20 survey results that require about one to two minutes to complete for a total of $3 to $6.

Or you can complete five 15-minute interviews for a total of $10 to $15.

Within Amazon Mechanical Turk, you can create screeners and target specific demographics to ensure that you're attracting the right type of user.

The third free or inexpensive method: snowball recruiting.

This means that at the end of a user interview or usability test, you ask the participant if they know anyone else who would like to participate.

Last in the free or inexpensive category: guerrilla recruiting.

This is where you go to a coffee shop or store and ask people to participate in a 5- to 15-minute conversation or test.

You usually offer an incentive, like a $5 gift card to Starbucks.

There are three main downsides of guerrilla testing.

One, it takes some practice and confidence to approach people in the wild.

Two, you might end up standing around waiting for people to show up.

Three, you might not end up with people who fit your criteria because you can't be too picky about who agrees to talk to you.

Now let's talk about methods that require a larger budget.

First, online ads. You can put out ads online through whatever ad medium you'd like to use.

This usually works best for surveys.

Using platforms like Facebook allows you to be hyper-targeted with who you recruit, but you risk paying for people who click your ad and don't actually sign up.

Depending on the ad platform you use, you might end up with people who don't represent your user.

Second, you can hire a research participant recruitment agency. This is a really efficient way to recruit people, but it's also expensive.

This last method is one of our favorites because of how easy it is, but it really only applies when you're employed as a UX designer.

Basically, you work with someone on your team, typically from marketing, support, or sales, to get the email addresses of people who are using the product.

You then reach out to them directly to sign up for research.

You can also work with someone from marketing to send out a blast email to the entire list to ask for participants.

Next, let's talk about gaining participants' consent for research.

This is actually a lot easier than it sounds.

All you have to do is get them to sign a consent form, which is a document that says they agree to participate in the study.

This protects you, and eventually the company you work for, from someone who thinks they deserve credit or financial compensation above and beyond any incentives you provide for their help in your study.

We recommend finding a research consent form template online and adapting it for your own use.

Now that we've talked through the different ways to find participants and gain their consent, let's talk about incentives.

Incentives are just what they sound like.

They are a form of reward or financial compensation to entice people to participate.

The size of the reward usually depends on the method you use as well as the amount of time the research takes.

If you're conducting five guerrilla tests at a coffee shop that each last 15 minutes, we recommend providing an incentive of $5 to $10 per test. That works out to around $25 to $50 in total.

If you're conducting your interviews using Amazon Mechanical Turk, those same five 15-minute interviews would cost only $10 to $15 in total. That's $2 to $3 per interview.
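The incentive math above is simple multiplication and division. Writing it down can help when you're budgeting several rounds of research. Here's a minimal Python sketch. The function names are hypothetical, and the dollar figures are only this lesson's example ranges, not market rates.

```python
from typing import Tuple


def incentive_budget(sessions: int, low_per_session: float, high_per_session: float) -> Tuple[float, float]:
    """Return the (low, high) total incentive cost for a batch of sessions."""
    return sessions * low_per_session, sessions * high_per_session


def per_session_cost(total: float, sessions: int) -> float:
    """Back out the per-session cost from a total budget."""
    return total / sessions


# Five 15-minute guerrilla sessions at $5 to $10 each.
print(incentive_budget(5, 5, 10))  # (25, 50)
# Five 15-minute Mechanical Turk interviews costing $10 to $15 in total.
print(per_session_cost(10, 5), per_session_cost(15, 5))  # 2.0 3.0
```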

That said, you can start by offering no incentives at all and see whether people agree to participate.

When you're employed as a UX designer, it's not unheard of to provide somewhere between $50 and $200 per participant, depending on the amount of time it takes and the complexity of the research.

It's important to point out that there are other things you can provide in return for someone's help other than just straight cash.

You just have to ask yourself, "what can I offer that would make it worth that person's time?"

So if you're on your own or working for a company without a budget, you can still offer incentives.

You'll just have to be creative.

And there are plenty of folks who are just willing to help for free.

All in all, when it comes to recruiting, use whatever works best for you to find the right people to talk to.

Just do your best to find at least four to six participants.

Once you've added your interview questions to the script, tested your equipment, and recruited your participants, finally, you'll conduct a dry run with a friend or family member.

This will help you practice your delivery and smooth out any of the kinks in the script and questions.

Do everything like you would for a real interview.

This means that you'd start by reading the introduction script, recording the session, and asking them all of your questions even if you already know their answers.

This will help you get a sense of how long it takes, if everything is worded correctly, and how it flows.

If you're running remote sessions, now's a great time to make sure that you won't have any technical difficulties.

After your script is ready and your participants are recruited, all you have left to do is run each user interview.

So let's talk about how to run one for yourself.

Remember, each user interview has three parts: the introduction, the questions, and the wrap-up.

With this in mind, the first thing you do is read out the introduction script to the participant.

It's the exact same intro for each participant, which helps eliminate biases because you begin the session the same way every time.

The introduction script does six things.

First, it sets the participant's expectations for the session and helps them get comfortable talking with you.

Second, it informs them why you're conducting the interview.

Third, it asks them to share only their own opinions, not what they think others believe.

Fourth, it assures them that there are no right or wrong answers and that you're not testing their knowledge. This is so important because it helps them express their opinions without feeling like their intelligence is being questioned.

Fifth, it frees them up to be as honest as possible.

Sixth, it gets their permission to record the session and assures them that the recording will only be used internally for your notes and nothing else.

After the introduction, you'll start asking the interview questions.

Ask each question one by one and take notes on their responses.

Don't be too focused on your notes though, or you might miss something. You'll always have the recording you can analyze later.

Also, don't be afraid to go off script.

Many times your participants will dig into a topic that you didn't account for.

That's awesome.

Take advantage of it.

You can prompt the participant to tell you more by asking, "Can you tell me a bit more about that?" or even just "Why?"

You'll be surprised how much you can learn from doing that.

Finally, after your questions, you'll wrap up the session by asking them if they have any questions for you and thanking them for their time.

Now, let's cover some do's and don'ts of user interviews.

Do ask to record the session and let them know how you're going to use that information.

Don't assume that it's okay just to record someone. That can become a serious legal problem.

Do focus on the participants.

Don't focus on note taking. You'll always have the recording to go back to.

We highly recommend using a service like Dovetail to transcribe and analyze your results.

You can find a link to Dovetail in the video description.

Do ask seemingly simple questions. Don't make assumptions.

Sometimes you'll assume that you shouldn't ask a basic question because you think you know the answer.

However, you don't truly know the answer. So ask the question.

Do make your participants feel comfortable. It's always a good idea to start interviews by asking open-ended questions about the participants themselves to warm them up and get them comfortable talking with you.

Be okay with silence.

This can be hard for some folks, but this is crucial.

Doing this gives the user space to say what they want without being interrupted.

Additionally, periods of silence put gentle pressure on them to pipe up.

Some of the best research findings come from a few seconds of silent reflection from the participant.

Don't try to fill in the silence. Just ask your question and listen.

Do keep an eye on the clock to make sure you don't run over the session time.

Don't continue over time without getting their permission first. Continuing past the agreed-upon time is poor form.

It shows a lack of respect for the participant's time.

For interviews using Amazon Mechanical Turk, avoid going over at all costs.

Be neutral. Don't provide expressions or mannerisms that the participant can react to.

You can bias the participant by not doing this properly.

They can change how they respond based on how you're acting. So be friendly, but be as neutral as possible.

Be open, be curious. Show that you're genuinely interested in what they're saying. Remember, you're learning from them. You're the student, they're the teacher.

Don't act like their responses aren't interesting.

If participants feel like you're bored with their answers, they'll stop talking.

If someone tells you something interesting that isn't in the script, go with the flow. You might find some hidden gems.

Moving on, let's talk about some drawbacks of user interviews.

First, people tend to say they'll do one thing when they'll actually do another.

This comes back to attitudinal versus behavioral research, which we talked about in the intro to research and understanding video.

User interviews are inherently attitudinal (what people say they'll do), because we don't observe how participants actually behave when they're using our product without us around.

This can potentially skew our results.

For example, let's say you're interviewing users about a dieting app.

They might say they'd love to use it, but in actuality, they'd rather sit on the couch with a pizza and wouldn't download your app in the first place.

This can sometimes give you false positives.

Second, your interview will only be as good as your participant's memory.

There's a good chance that your participant won't remember everything you ask them about.

This means that you'll get incomplete data and not get all the details you were looking for.

Plus, when it comes to biases, people might fill in their memory gaps with information that isn't true because they don't want to disappoint you or come across as not smart.

Research biases are definitely a thing and you'll see them play out here.

Third, you might hear a bunch of noise.

There's a good chance that your users will ask for random product features.

Many times that's just noise.

However, if you hear the same thing from multiple people, then the request might be worth looking into.
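One way to separate signal from noise is to tag each feature request in your interview notes with a short label, then count how many different participants raised it. The sketch below assumes you've already done that tagging by hand. The participant IDs, request labels, and the two-participant threshold are hypothetical examples, not a rule from this lesson.

```python
from collections import Counter
from typing import Dict, List, Tuple


def repeated_requests(
    requests_by_participant: Dict[str, List[str]], min_participants: int = 2
) -> List[Tuple[str, int]]:
    """Return requests mentioned by at least `min_participants` different people, most common first."""
    counts: Counter = Counter()
    for requests in requests_by_participant.values():
        # set() so a participant who repeats the same request is only counted once.
        counts.update(set(requests))
    return [(request, n) for request, n in counts.most_common() if n >= min_participants]


notes = {
    "P1": ["price alerts", "dark mode"],
    "P2": ["price alerts", "price alerts"],
    "P3": ["store map"],
    "P4": ["price alerts", "store map"],
}
print(repeated_requests(notes))  # [('price alerts', 3), ('store map', 2)]
```

"Dark mode" comes from a single participant, so it drops out as likely noise. The two requests that repeat across participants might be worth looking into.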

Fourth, interviews aren't the best platform for asking users if they want a specific piece of functionality.

Most users will tell you, "yes, I want that shiny new thing."

Even if they won't use it, or if it's not that helpful.

They think they want it (attitudinal), but in reality, they won't actually use it (behavioral).

Finally, let's talk about what to do when you've finished conducting a user interview with a participant.

In our Figma workbook, there's a section below the user interview script template called "Top five takeaways."

Right after each interview, we recommend going to that section and writing down what you believe to be the most important insights you learned during that interview.

You'll also want to pull out direct quotes from your interviews that provide those insights.

Repeat this for each user interview you conduct.

At the end, you should have four to six user interviews completed.

And that's it for this video.

I know we've covered a lot. We've come a long way.

So here's the recap.

A user interview is a one-on-one conversation between a UX researcher and a participant that provides insights into the needs and deep motivators of users.

Interviews can range from 15 minutes to an hour and a half, and we recommend aiming for 15- to 45-minute sessions.

You usually only need to conduct user interviews with four to six participants to gain insights.

To effectively prepare for user interviews, you need to define your interview goals, create your interview questions, build your script and discussion guide, test your equipment, recruit participants, and conduct a dry run.

We reviewed do's and don'ts such as being okay with silence.

And once you're done with an interview, write out your top five takeaways from the interview.

In the future, when you're employed as a UX designer, invite other stakeholders to listen in on the interviews you conduct.

It'll help the entire team get on the same page and gain empathy for users.

Plus, it's really awesome when other teammates are taking notes so you all can compare your insights.

So now it's time for the course assignment.

When you open up the Figma workbook and go to the user interviews tab, you'll notice that we did all the hard work for you and already conducted five user interviews.

So now all you have to do is read through all five transcripts.

Then below each transcript, write down your top five most important takeaways from each interview, as well as other notes and observations.

Below the first two participants' takeaways, you'll notice two transparent screens that show you our takeaways from each of those interviews.

We recommend you don't look at them until you've done it for yourself.

Then when you've finished doing it on your own, you can go and see how your work compares.

You got this.